VCDX Skill Storage

Originally posted in September 2015 on vmice.net

Storage skills cover storage subsystems and their common technology features, and then storage networks in general. The same principle applies here as with the Compute skills: storage is not even remotely simple anymore, since the old large RAID-level volumes are slowly fading from existence. Now you have AFAs, local flash, hybrids, storage virtualization, VAAI, VASA, VVols and vSAN (plus a lot of others, but I fear the post would end up as one large list of acronyms).

At their core, storage subsystems are simple (like servers): they run a set of hard drives grouped into specific disk groups, most likely protected against single-disk failures by RAID or an equivalent technology (which is just a way to distribute data so that you have at least one copy of it elsewhere, almost).
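To make the "almost" concrete: single-parity RAID (RAID 5 style) does not keep a second copy of every block; it stores an XOR parity block from which any one lost block in the stripe can be recomputed. A minimal Python sketch, using made-up 4-byte blocks as stand-ins for disks (the block contents and the `xor_blocks` helper are purely illustrative):

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, position by position."""
    # zip(*blocks) yields one tuple of byte values per position across all blocks
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical stripe: three data "disks", each holding one 4-byte block
data = [b"VMFS", b"NFS3", b"iSCS"]
parity = xor_blocks(data)  # written to a fourth disk

# Simulate losing disk 1 and rebuild its block from the survivors plus parity
rebuilt = xor_blocks([data[0], data[2], parity])
assert rebuilt == data[1]
print(rebuilt)  # b'NFS3'
```

Losing a second block in the same stripe leaves nothing to rebuild from, which is why RAID 6 and RAID-DP add a second parity.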

Then each vendor has its own “all new, much better” caching mechanism within each subsystem, with various names and different capabilities. A few of the ones I’ve seen are Lenovo Easy Tier, EMC FAST Cache, HP 3PAR Adaptive Caching, Hitachi Flash Acceleration etc.

There are so many storage-specific companies in IT now that it’s getting hard to know each one and how it would relate to a specific design. There is a very slim chance of being asked about any vendor other than the one used in your design, but that one you need to know at an expert level. It is still good to know about the alternatives, even if it’s just their pros and cons.

Here is a list of some of the technologies you might come across:

- Good Old Storage

- HP, Hitachi, Lenovo, Dell, NetApp, EMC.

- Please note that the “old” part does not really apply, since caching mechanisms and storage management have changed drastically in most arrays.

- Server Based Caching

- PernixData, Fusion-io

- AFAs

- All the big vendors have AFAs (HP, Hitachi, Lenovo, Dell, NetApp, EMC), and then there are the newer ones like Pure Storage, Tintri, Nimble Storage, Violin Memory, Nimbus Data, Tegile and SolidFire (thanks for the socks again guys 🙂)

- Please note that some use flash only, some SSDs only and some a mix, all under the same AFA name. Nothing called an ASA (all-SSD array) out there (yet).

- Hybrids

- Hybrids are just regular storage arrays with both HDDs and SSDs. Dell, EMC, HP, NetApp, Tegile and Lenovo all have one.

- Simple Storage-Compute Convergence

- HP StoreVirtual, VMware vSAN

- Simple Storage-Compute Hyperconvergence

- Nutanix, SimpliVity, Maxta, Pivot3, Gridstore, VMware EVO:RAIL and its bigger brother EVO:RACK, Yottabyte and Atlantis Computing.

A lot? Yes, a lot. How do you select a single vendor from this list to fulfill the business requirements? I’m not going down that rabbit hole here; I think that will actually be my next blog series.

So how do those IOs get to the storage arrays? There are three ways to connect ESXi hosts to shared storage: iSCSI, NFS and Fibre Channel. You should know these inside and out: the pros and cons of each, why the ones not used in your design were not chosen, and how you would design if you had to select a different storage network protocol.

Here is a short list of things you should know about storage network protocols:

- NFS and VMware design considerations (Jumbo Frames, load balancing with NFS v3 etc.) (check out Chris Wahl’s blog)

- iSCSI and VMware design considerations (load balancing, performance, security risks etc.) (Chris Wahl again)

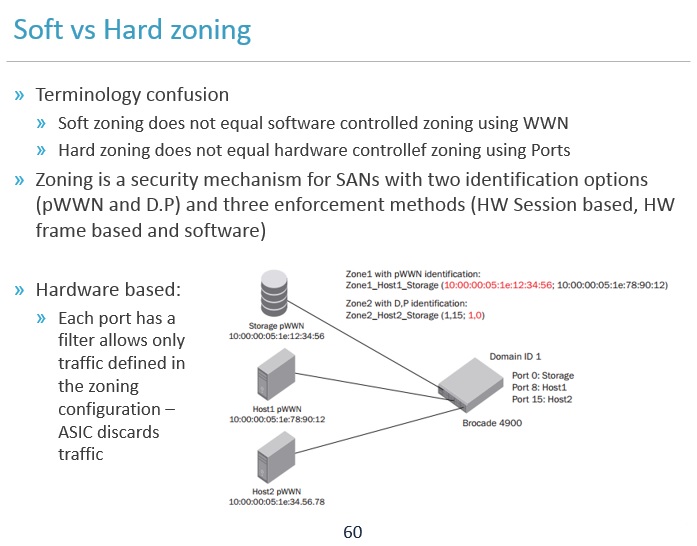

- Fibre Channel and VMware design considerations (“soft” versus “hard” zoning, port buffers, FC topologies, FCoE considerations, initiator:target zoning, security in FC zoning etc.)
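Single-initiator, single-target zoning (one common reading of the initiator:target item above) means every zone contains exactly one host HBA port and one array port. A small Python sketch that builds such a zone list; the host names, port labels, WWPNs and the `z_<host>_<port>` naming scheme are all hypothetical, and it only produces data, not commands for any particular switch vendor:

```python
from itertools import product


def single_initiator_zones(
    initiators: dict[str, str], targets: dict[str, str]
) -> list[tuple[str, str, str]]:
    """Build one zone per (HBA port, array port) pair.

    Each zone holds exactly one initiator WWPN and one target WWPN, so an
    HBA never shares a zone with another HBA.
    """
    zones = []
    for (host, i_wwpn), (port, t_wwpn) in product(initiators.items(), targets.items()):
        zones.append((f"z_{host}_{port}", i_wwpn, t_wwpn))
    return zones


# Hypothetical fabric A: two ESXi HBA ports and two array front-end ports
hbas = {
    "esx01_hba0": "10:00:00:00:c9:aa:00:01",
    "esx02_hba0": "10:00:00:00:c9:aa:00:02",
}
array_ports = {
    "spa_p0": "50:06:01:60:00:00:00:01",
    "spb_p0": "50:06:01:68:00:00:00:01",
}

for name, initiator, target in single_initiator_zones(hbas, array_ports):
    print(name, initiator, target)
```

Many designs use single-initiator, multi-target zones instead; the trade-off is a larger zone count in exchange for tighter isolation between ports.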

The choice of vendor, technology and transport protocol will impact the design qualities in various ways:

- Availability can be impacted by RAID level or similar technology, data redundancy policies, component redundancy etc.

- Manageability can be impacted by management tools as always, the learning curve, automation of volume creation, storage network management etc.

- Performance can be impacted by RAID levels or similar technology (write penalties), bandwidth, cache size, cache mechanism, link redundancy and throughput etc.

- Recoverability can be impacted by data redundancy with stretched clusters, replication technologies, data mirroring (async/sync) etc.

- Security can be impacted by access control as always, encryption, integrated multitenancy, authentication etc.
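The write penalty is where the RAID level hits performance hardest. A commonly used back-of-the-envelope formula is backend IOPS = read IOPS + write IOPS × write penalty, with penalties of 2 for RAID 1/10, 4 for RAID 5 and 6 for RAID 6. A Python sketch assuming a hypothetical workload of 5,000 frontend IOPS at 70% reads and a rule-of-thumb 180 IOPS per 15K disk; array caches and vendor-specific write handling will change the real numbers:

```python
import math

# Commonly quoted write penalties: backend IOs generated by one frontend write.
# Real arrays can differ (write cache, full-stripe writes, vendor RAID variants).
WRITE_PENALTY = {"RAID0": 1, "RAID1": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def backend_iops(frontend_iops: int, read_pct: int, raid: str) -> float:
    """Each read costs one backend IO; each write costs the RAID write penalty."""
    reads = frontend_iops * read_pct / 100
    writes = frontend_iops * (100 - read_pct) / 100
    return reads + writes * WRITE_PENALTY[raid]


def disks_needed(frontend_iops: int, read_pct: int, raid: str, iops_per_disk: int) -> int:
    """Spindles required to absorb the backend load (performance only, not capacity)."""
    return math.ceil(backend_iops(frontend_iops, read_pct, raid) / iops_per_disk)


# Hypothetical workload: 5,000 frontend IOPS, 70% reads, ~180 IOPS per 15K disk
for raid in ("RAID10", "RAID5", "RAID6"):
    print(f"{raid}: {backend_iops(5000, 70, raid):.0f} backend IOPS, "
          f"{disks_needed(5000, 70, raid, 180)} disks")
```

For the same workload, RAID 6 needs almost twice the spindles of RAID 10 (70 versus 37), which is exactly the kind of trade-off worth being able to justify.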

I had been working with storage arrays for 4 1/2 years at that time and had spent a lot of time learning about storage technologies and the underlying storage area networks. So I was fairly confident that my skill level was high for this part. I realized later that I knew less than I thought. Remember the graph…

What is very important is knowing the technology used in the design at an expert level, along with the underlying storage network. So if the design included an IBM Storwize solution using a Fibre Channel SAN, you need to know everything there is to know about those two specific items.

Here is a deep dive slide from my VCDX deck to show some of the things I covered:

As a list of common storage knowledge, I used Rene Van Den Bedem’s list from his blog as a starting point: http://vcdx133.com/2014/04/24/vcdx-study-plan-storage/

Important items of knowledge:

- VMware storage capacity planning (IO and capacity calculations)

- VM Disk Size impact on datastore size

- IO queues (all the queues from the VM to the storage array). This blog post from Chad Sakac is something you should read multiple times: http://virtualgeek.typepad.com/virtual_geek/2009/06/vmware-io-queues-micro-bursting-and-multipathing.html

- RAID level considerations (read/write penalties, all the RAID levels including RAID-DP)

- IO calculations based on RAID levels

- vSphere Metro Clustering

- Storage Replication

- vSphere features like RDMs and virtual SCSI controllers, and their considerations (MSCS support, longer queues etc.)
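For the capacity side, a VM’s footprint on a datastore is more than its VMDKs: by default it also gets a .vswp swap file equal to configured memory minus the memory reservation, plus logs, configuration files and any snapshot deltas. A Python sketch of datastore sizing, where the 10% overhead allowance and 20% free-space headroom are hypothetical design choices, not VMware figures, and the `VM` class is illustrative only:

```python
from dataclasses import dataclass


@dataclass
class VM:
    disk_gb: int             # provisioned VMDK capacity (worst case if thin provisioned)
    memory_gb: int           # configured vRAM
    reservation_gb: int = 0  # memory reservation; the .vswp file is vRAM minus this


def datastore_size_gb(vms: list[VM], overhead_pct: int = 10, free_pct: int = 20) -> int:
    """Size a datastore for the given VMs.

    Each VM needs its VMDKs plus a swap file (vRAM minus reservation), grown by a
    hypothetical overhead_pct allowance for logs, config files and snapshot deltas.
    The total is divided by (1 - free_pct/100) to keep free_pct of the datastore empty.
    Integer arithmetic avoids floating-point rounding at the ceiling step.
    """
    used_x100 = sum(
        (vm.disk_gb + vm.memory_gb - vm.reservation_gb) * (100 + overhead_pct)
        for vm in vms
    )
    return -(-used_x100 // (100 - free_pct))  # ceiling division


# Hypothetical: 20 identical VMs, varying disk size and memory reservation
for disk_gb, mem_gb, res_gb in ((60, 8, 0), (100, 8, 0), (60, 8, 8)):
    vms = [VM(disk_gb, mem_gb, res_gb) for _ in range(20)]
    print(disk_gb, mem_gb, res_gb, datastore_size_gb(vms))
```

Growing each disk from 60 GB to 100 GB takes the same 20 VMs from 1870 GB to 2970 GB, while fully reserving their memory brings the 60 GB case down to 1650 GB, one way memory reservations feed back into storage design.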

As with the other skill updates, I created a list of items that I answered and then copied to Quizlet for easy review (P.S. This blog series is not sponsored by Quizlet).